How we used AI to translate sign language in real time

Imagine a world where anyone can communicate using sign language over video. Inspired by this vision, some of our engineering team took the idea to HealthHack 2018. In less than 48 hours, using the power of artificial intelligence, the team produced a working prototype that translated signs from the Auslan alphabet to English text in real time.

The problem

People who are hearing impaired are left behind in video consultations. Our customers tell us that, because they can’t sign themselves, they have to use basic text chat to hold their consults with hearing-impaired patients - a less than ideal solution. With the growing adoption of telehealth, deaf people need to be able to communicate naturally with their healthcare network, regardless of whether the practitioner knows sign language.

Achieving universal sign language translation is no easy feat. The dynamic nature of natural sign language makes it a hard task for computers, not to mention the fact that there are over 200 dialects of sign language worldwide. Speakers of American Sign Language (ASL) have been fortunate in that a number of startups and research projects are dedicated to translating ASL in real time.

In Australia, however, where Auslan is the national sign language, speakers have not been so fortunate: there is next to no work being done for the Auslan community. We thought we might be able to help.

Our solution

Our goal was a lofty one - create a web application that uses a computer’s webcam to capture a person signing the Auslan alphabet, and translate it in real time. This would involve:

- Gathering data

- Training a machine learning model to recognise the Auslan alphabet

- Building the user interface

Building the Auslan Alphabet Image Dataset

Machine learning, a branch of artificial intelligence, is the practice of teaching computers how to learn. In general, we do this by giving computers a bunch of examples of “labelled” data - e.g. here is an image, and it is a dog - and telling the computer to find similarities between objects with the same label, a process called “supervised learning”.

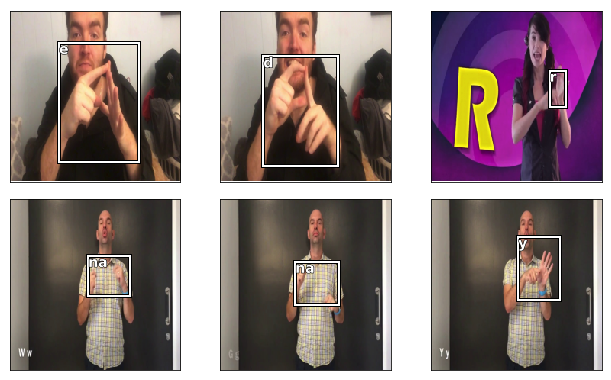

So, to train a machine learning model that could recognise the Auslan alphabet, we needed a bunch of images of people signing each letter in the Auslan alphabet, coupled with the English letter each photo represented. Our model also needed to learn where the hands were in the image, and for that, we needed to draw bounding boxes around the hands in each image.

This kind of data exists for ASL, but as it turns out, there is no dataset of images of the Auslan alphabet.

So, we made one. We downloaded several videos from YouTube of people demonstrating the Auslan alphabet, extracted every frame from each video, and then manually drew bounding boxes around the hands and labelled each with its letter, one frame at a time - it was exactly as much fun as it sounds! Machine learning models like diverse input data, so we also captured around 700 images of each team member signing the letters at different angles and in different lighting conditions.
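To make the shape of this data concrete, here is a minimal sketch of what one labelled frame could look like in Python. The class names, field names, and file path are hypothetical and chosen only for illustration; the actual dataset format may well differ.

```python
from dataclasses import dataclass
import string


@dataclass(frozen=True)
class BoundingBox:
    """Axis-aligned box in pixel coordinates (origin at the top-left of the image)."""
    x_min: int
    y_min: int
    x_max: int
    y_max: int

    def __post_init__(self):
        # Reject boxes with zero or negative width or height.
        if self.x_min >= self.x_max or self.y_min >= self.y_max:
            raise ValueError("bounding box must have positive width and height")


@dataclass(frozen=True)
class LabelledFrame:
    """One training example: an image, where the hands are, and the letter signed."""
    image_path: str     # hypothetical path to a frame extracted from a video
    hands: BoundingBox  # box drawn around the signing hands
    letter: str         # the English letter the sign represents

    def __post_init__(self):
        # Accept exactly one uppercase letter, A to Z.
        if len(self.letter) != 1 or self.letter not in string.ascii_uppercase:
            raise ValueError("letter must be a single character A-Z")


# Illustrative values only; not taken from the real dataset.
example = LabelledFrame(
    image_path="frames/video01_frame0042.png",
    hands=BoundingBox(x_min=120, y_min=80, x_max=310, y_max=260),
    letter="H",
)
```

Validating each record as it is created is one way to catch labelling slips early, which matters when every box is drawn by hand.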

Proof of concept

Now that we had some data, we wanted to see if we could actually get something working. Enter YOLO (You Only Look Once) - a popular algorithm for object detection. Clearly not his first rodeo, our team member Michael Swan (Tabcorp) was able to produce a proof-of-concept video that recognised the letters of "HEALTH HACK" after only a few hours of training.

Excuse the poor signing - Tom only learned the signs 10 minutes prior!

[youtube https://www.youtube.com/watch?v=DDGplO5jB4M?rel=0&showinfo=0&w=560&h=315]

Training a machine learning model

A controlled example with limited classes is great, but we wanted to see how far we could get classifying all 26 signs of the Auslan alphabet. For this, we trained our own neural network. Inspired by the mammalian brain, neural networks, and convolutional neural networks in particular, have proven to be very effective at image classification, and our problem was no exception.

Lex Toumbourou (ThoughtWorks), the team’s resident machine learning expert, trained a convolutional neural network using PyTorch (a Python machine learning framework) to predict where the hands were (each point of the bounding box), as well as the class (the letter). A few tweaks here and there and we had a model that could predict signs from the Auslan alphabet with ~86% accuracy. See Lex's research and implementation.
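A model like this has two outputs to check: the letter and the box. As a rough sketch, and not necessarily how the ~86% figure above was measured, two common ways to score such predictions are classification accuracy for the letters and intersection over union (IoU) for the boxes. The function names and the box layout `(x_min, y_min, x_max, y_max)` are assumptions made for this example.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    # Corners of the overlapping rectangle, if the boxes overlap at all.
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])

    # Clamp at zero so disjoint boxes contribute no intersection.
    inter = max(0, ix_max - ix_min) * max(0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def letter_accuracy(predicted, actual):
    """Fraction of predicted letters that match the labelled letters."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must be the same length")
    if not actual:
        raise ValueError("need at least one example")
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)
```

An IoU of 1.0 means the predicted box matches the hand-drawn one exactly, and 0.0 means they do not overlap at all.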

The Final Push

Remember our end goal - translate the Auslan alphabet in real time. Building the first image dataset for the Auslan alphabet and having a model predicting with ~86% accuracy would have been a solid achievement for a hackathon on any given day.

But we wanted to push it even further. We wanted a demo-able application.

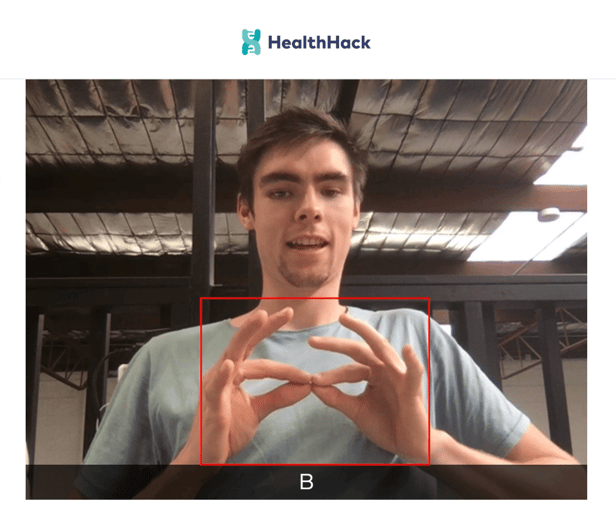

The final two components we needed were a backend service that, given an image of a sign, would return the predicted letter, and a front end that could capture and display video from the user’s webcam and ask the backend for predictions.

For the backend, we wrapped our model in a Flask app (Python): a POST request with the image as the payload would return the 4 points of the bounding box and the class (or letter) of the image. On the client side, we used plain ol' JavaScript to capture the user’s webcam with the browser’s getUserMedia method. Using an invisible canvas, we took a frame from the video every 200ms, requested a prediction, and displayed the results accordingly.
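The request and response contract described above can be sketched in plain Python. This is not the team's Flask code: the function names, the JSON field names (`box`, `letter`), the status codes, and the stub predictor are all assumptions made for illustration. In a Flask app, the body of a POST route would do roughly what `handle_prediction_request` does here.

```python
import json


def stub_predict(image_bytes):
    """Hypothetical stand-in for the trained model: returns a fixed box and letter."""
    return (120, 80, 310, 260), "H"


def handle_prediction_request(image_bytes, predict=stub_predict):
    """Turn the raw image payload of a POST request into (status, JSON body)."""
    if not image_bytes:
        # Nothing to classify: report a client error instead of calling the model.
        return 400, json.dumps({"error": "empty image payload"})

    (x_min, y_min, x_max, y_max), letter = predict(image_bytes)
    body = {
        "box": [x_min, y_min, x_max, y_max],  # assumed layout of the 4 box values
        "letter": letter,                     # predicted class
    }
    return 200, json.dumps(body)


# Usage: the front end would send one captured frame and read the JSON back.
status, body = handle_prediction_request(b"fake image bytes")
```

Keeping the model behind a single function like `predict` makes it easy to swap the stub for a real model without touching the request handling.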

For those interested in the technical details, all of our code is open source on GitHub for everyone to use and improve.

We were able to build the first Auslan alphabet image dataset, train a machine learning model from scratch, AND make a Python web app which could translate sign language in real time - in one weekend!

Our team

We couldn’t have done it without our amazing team. We thank Lex Toumbourou (Machine Learning Engineer) and Michael Swan (Game developer) for coming together with us and building something incredible over the weekend.

Where to from here

We are super proud of what we achieved over the weekend, but it’s only just the beginning. With a bigger dataset and more tweaking of our models, we believe we could develop accurate and reliable technology for fingerspelling using Auslan.

Of course, sign language involves more than just hands and letters; it incorporates facial expressions and sequences of gestures to form full sentences. While natural sign language translation is still an open problem, we believe we moved the needle ever so slightly towards a better experience for the hearing-impaired.

We’d love to learn more about how we can help bring telehealth to the hearing-impaired. If you’re interested in learning more, please reach out to us at support@coviu.com.